Key Takeaway:

Every development project in some way has to deal with hierarchical data. That comes in the form of catalogs, cascading choices etc. In my previous article I demonstrated a way to maintain simple 2 level hierarchical data. In this article I will demonstrated the most optimal way of storing deep hierarchical data, that cannot be efficiently maintained using key-value pair setup. The way to do this is by using a dedicated data type in SQL Server – HierarchyId.

The article will not go deep into explaining about hierarchyid or the methods that accompany it, but rather will show how to use the data type in real world scenarios.

Read On:

When you have to store a complex hierarchy, traversing and manipulating that hierarchy becomes a tedious task. Nested hierarchy that goes beyond a flat 2 tier level is better managed with the help of a dedicated data type in SQL Server – HierarchyId.

HierarchyId is an in-built data-type in SQL Server and is based on CLR representation of binary data. It essentially stores an ordered path in an efficient manner. Because it is a CLR based data-types, there are methods that are available that can be leveraged in SQL queries to work with the data type. Although it is based on CLR, you do not have to take any additional steps in SQL Server to enable the use of the data type. It was first introduced in SQL Server 2008.

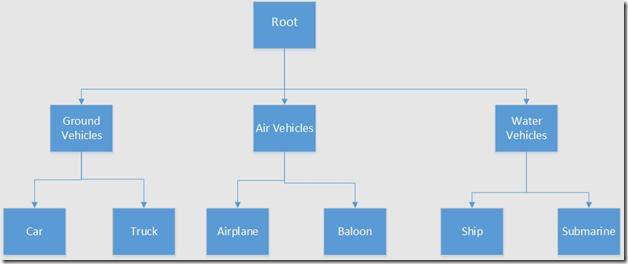

I will use some of the methods in this article to show you how to maintain a simple hierarchy. I will be re-using the example from my previous post and will try to store a hierarchy of different types of vehicles depending upon the environment in which they can be used.

Figure 1 Hierarchy of vehicles

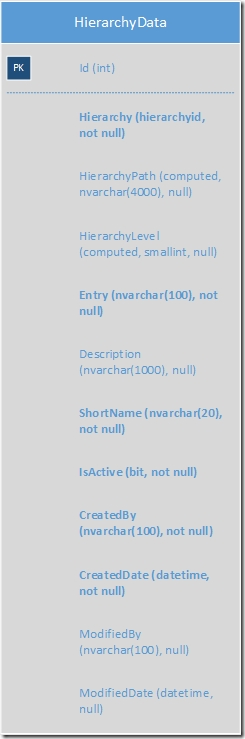

In order to accommodate such a hierarchy, let’s create a data model. The data model in our case will only consists of a single table. This table can then be connected to the rest of the tables in the main data model with the help of primary-foreign key relationship.

Figure 2 Table that will capture the hierarchy. Bold entries are required.

HierarchyData Table:

| Column Name | Data type | Description |

| Id | Int | The auto incrementing integer type primary key. |

| Hierarchy | Hierarchyid | Binary representation of ordered path using data type hierarchyid. |

| HierarchyPath | Computed | A computed column to show path of a node in the hierarchy as a string for our easy comprehension. |

| HierarchyLevel | Computed | A computed column to show level of a node in the hierarchy as am integer value for our easy comprehension. |

| Entry | Navarchar(100) | Entry denoting either a parent or child. |

| Description | Nvarchar(1000) | Description about entry. |

| ShortName | Nvarchar(20) | Short string about node which will be leveraged in where clause in SQL queries. |

| IsActive | Bit | Bit field denoting if an entry is active or not. |

| CreatedBy | Nvarchar(100) | Entry to denote who actually created the entry. |

| CreatedDate | Datetime | Entry denoting the date and time when the entry was created. |

| ModifiedBy | Nvarchar(100) | Entry to denote who modified the entry. |

| ModifiedDate | Datetime | Entry denoting the date and time when the entry was modified. |

Contrast this with the data model as presented in the previous article. You will notice that in this case we are only using a single table to store both the parent and the child entries.

As is done in the previous article, for our ease of querying, we will maintain a column called “ShortName” that will be used in where clause in all the queries. This makes the queries independent of the primary key column, in which the value may change as we move the data from one environment to another.

Now here’s the SQL that you can use to create the table and fill it up with data.

-- Creation of table

Create Table dbo.HierarchyData

(

Id int identity(1,1) primary key,

Hierarchy hierarchyid not null,

HierarchyPath as Hierarchy.ToString(),

HierarychyLevel as Hierarchy.GetLevel(),

Entry nvarchar(100) not null,

Description nvarchar(1000),

ShortName nvarchar(20) not null,

IsActive bit not null,

CreatedBy nvarchar(100) not null,

CreatedDate datetime not null,

ModifiedBy nvarchar(100) null,

ModifiedDate datetime null

);

-- Insertion of data

Declare

@Root hierarchyid,

@Parent hierarchyid,

@SiblingOnRight hierarchyid,

@SiblingOnLeft hierarchyid,

@HierarchyValue hierarchyid;

Set @Root = hierarchyid::GetRoot();

Insert into dbo.HierarchyData Values

(@Root,'Root Level','Root Level','ROOT', 1, 'Parakh Singhal',GetUtcDate(),null,null);

Select @HierarchyValue = @Root.GetDescendant(null,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Ground Vehicles','Ground Vehicles','GRND_VEH',1,'Parakh Singhal', GetUtcDate(),null,null);

Select @SiblingOnLeft = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH';

Set @HierarchyValue = @Root.GetDescendant(@SiblingOnLeft,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Air Vehicles','Air Vehicles','AIR_VEH',1,'Parakh Singhal', GetUtcDate(),null,null);

Select @SiblingOnLeft = Hierarchy from dbo.HierarchyData where ShortName = 'AIR_VEH';

Set @HierarchyValue = @Root.GetDescendant(@SiblingOnLeft,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Water Vehicles','Water Vehicles','WATER_VEH',1,'Parakh Singhal',GetUtcDate(),null,null);

Select @HierarchyValue = Hierarchy.GetDescendant(null,null) from dbo.HierarchyData where ShortName = 'GRND_VEH';

Insert into dbo.HierarchyData values

(@HierarchyValue,'Trucks','Trucks','GRND_VEH_TRUCKS',1,'Parakh Singhal',GetUtcDate(),null,null);

Select @Parent = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH';

Select @SiblingOnLeft = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH_TRUCKS';

Set @HierarchyValue = @Parent.GetDescendant(@SiblingOnLeft,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Cars','Cars','GRND_VEH_CARS',1,'Parakh Singhal',GetUtcDate(),null,null);

Select @Parent = Hierarchy.GetDescendant(null,null) from dbo.HierarchyData where ShortName = 'AIR_VEH';

Set @HierarchyValue = @Parent;

Insert into dbo.HierarchyData values

(@HierarchyValue,'Hot Air Baloon','Hot Air Baloon','AIR_VEH_BALOON',1,'Parakh Singhal',GetUtcDate(),null,null);

Select @Parent = Hierarchy from dbo.HierarchyData where ShortName = 'AIR_VEH';

Select @SiblingOnLeft = Hierarchy from dbo.HierarchyData where ShortName = 'AIR_VEH_BALOON';

Set @HierarchyValue = @Parent.GetDescendant(@SiblingOnLeft,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Airplane','Airplane','AIR_VEH_AIRPLANE',1,'Parakh Singhal',GetUtcDate(),null,null);

Select @Parent = Hierarchy from dbo.HierarchyData where ShortName = 'WATER_VEH';

Set @HierarchyValue = @Parent.GetDescendant(null,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Ship','Ship','WATER_VEH_SHIP',1,'Parakh Singhal',GetUtcDate(),null,null);

Select @Parent = Hierarchy from dbo.HierarchyData where ShortName = 'WATER_VEH';

Select @SiblingOnLeft = Hierarchy from dbo.HierarchyData where ShortName = 'WATER_VEH_SHIP';

Set @HierarchyValue = @Parent.GetDescendant(@SiblingOnLeft,null);

Insert into dbo.HierarchyData values

(@HierarchyValue,'Submarine','Submarine','WATER_VEH_SUBMARINE',1,'Parakh Singhal',GetUtcDate(),null,null);

And here’s the SQL that you can use to query some of the most common scenarios like:

1. Traversing down the hierarchy from a parent node,

2. Traversing up the hierarchy from a child node,

3. Finding the level of a node in the hierarchy,

4. Determining is a node is the child of a node,

5. Finding the ancestor of a child node.

Declare

@Root hierarchyid,

@Parent hierarchyid,

@Child hierarchyid,

@Node hierarchyid;

-- Selecting the entire table contents.

Select * from dbo.HierarchyData;

-- Finding the root element.

Set @Root = hierarchyid::GetRoot();

Select * from dbo.HierarchyData where Hierarchy = @Root;

-- Climbing down a hierarchy

-- Selecting a hierarhcy of nodes belonging to a parent.

Select @Parent = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH';

Select * from dbo.HierarchyData

where Hierarchy.IsDescendantOf(@Parent) = 1;

-- Climbing up the hierarchy

-- Selecting all the parents of a node

Select @Node = Hierarchy from dbo.HierarchyData where ShortName = 'WATER_VEH_SUBMARINE';

Select * from dbo.HierarchyData

where @Node.IsDescendantOf(Hierarchy) = 1;

-- Getting level of a node in the hierarchy.

Select Hierarchy.GetLevel() as HierarchyLevel from dbo.HierarchyData where ShortName = 'AIR_VEH_AIRPLANE';

-- Determining if a node is a child of a node.

Select @Parent = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH';

Select @Child = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH_TRUCKS'

Select @Child.IsDescendantOf(@Parent) as Result;

-- Determining an ancestor

-- The query selects the immediate ancestor.

Select @Node = Hierarchy from dbo.HierarchyData where ShortName = 'GRND_VEH_CARS';

Select * from dbo.HierarchyData where Hierarchy = @Node.GetAncestor(1);

Brief overview of some of the methods used in aforementioned sql queries:

1. GetRoot(): GetRoot methods when used on a hierarchyid type value will return the hierarchyid type value of the absolute root of the hierarchy.

2. GetDescendant(): Methods when used on a hierarchyid type value will return a hierarchyid type value. You can use this method to generate a new hierarchyid value when inserting data, the way I have demonstrated.

Notice that in order to create nodes that are siblings, you need to specify a pre-existing sibling (See the creation of water and air vehicles that are siblings to ground vehicles). Depending upon where you want to place the sibling in the hierarchy, you might need to provide both the sibling on the left and right in the hierarchy. Since I want to treat both air and water vehicles, I only mentioned sibling on the left in the hierarchy. If you want to place another category, say, amphibious vehicles between water and ground vehicles, then you will have to mention ground vehicles as the sibling on the left and water vehicles as the sibling on the right.

3. IsDescendantOf(): Method takes in a hierarchy id as input and returns a Boolean, if that is true.

4. GetAncestor(): Method takes in an integer value and gives the parent of the child node, depending on the integer value provided. The integer depicts the level in the hierarchy.

As you can see in the code above, maintaining a deep hierarchy with the help of hierarchyid is easy. It does not require cumbersome recursive Common Table Expressions and multiple tables to maintain, and yet offers more flexibility.

I have not gone deep into explaining about hierarchyid and the methods that accompany it. The main motive of this article is to demonstrate with the help of working code, how to maintain hierarchical data with the help of in-built mechanism in SQL Server.

References:

1. Model Your Data Hierarchies With SQL Server 2008

2. SQL Server 2008 - HierarchyID - Part I

3. Hierarchies with HierarchyID in SQL 2008