It's a lesson you should heed,

Try, try again.

- William Edward Hickson

Introduction

The humanities inspire many facets of human life. We geeks picked up the ethos of perseverance, applied it to computer science, and a pattern was born, aptly named the “Retry Pattern”.

Transient errors are a class of errors that are temporary but still interfere with the normal execution of commands in computers, producing failures that cannot be reliably reproduced or debugged. As the name suggests, they are momentary and can arise from various short-lived phenomena, both natural and man-made. Natural causes range from high-energy neutron particles to tremors dislodging integrated circuits on a server's motherboard. Man-made causes range from unintended network disruptions to servers frying themselves because of poor ventilation.

Today’s networks and servers can have redundancy and scalability built in, but that costs money: hosting your web service on two servers instead of one, for redundancy, roughly doubles the cost. A prudent alternative is to build smarts into the code that handle transient errors, rather than letting the application fail and creating the illusion that the servers are insufficient. One pattern that helps achieve this is the Retry Pattern.

The Retry Pattern, as the name suggests, retries an action if it fails. The number of retries is configurable, and the retries can be spaced apart to give the server some time to recover. In the .NET ecosystem, Polly remains the resilience framework of choice. It provides many patterns that help us bake resilience against transient errors into our code, and the Retry Pattern is one of them.

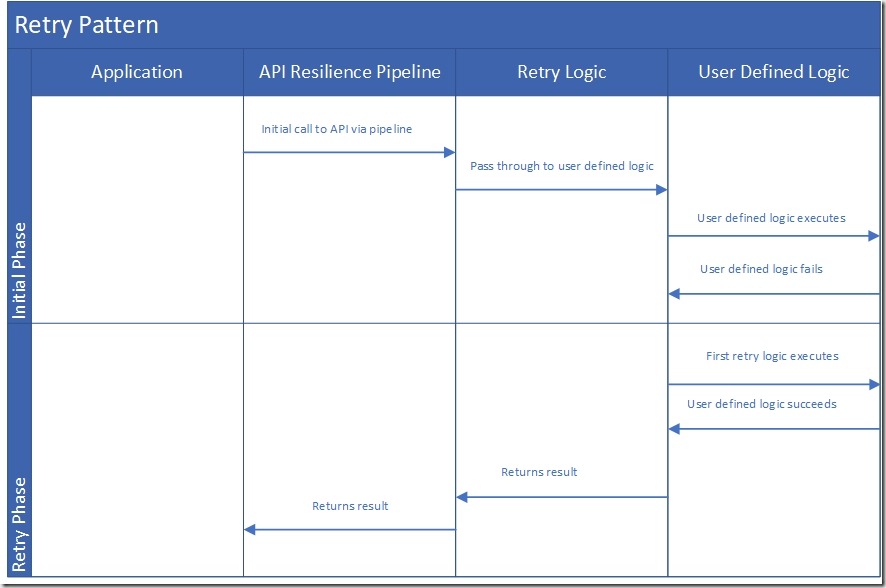

Figure 1 Retry Pattern
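To make the idea concrete before turning to Polly, here is a minimal hand-rolled retry helper in plain C#. It is a sketch only, not how Polly implements retries. The names `Retry`, `maxRetries` and `isTransient` are hypothetical. The helper retries a failing operation up to a fixed number of times with a constant pause between attempts, and only does so for failures that the caller classifies as transient.

```csharp
using System;
using System.Threading;

// Hypothetical helper (a sketch, not Polly's implementation).
// maxRetries: retries allowed after the original call.
// delay: constant pause between attempts.
// isTransient: decides whether a failure is worth retrying.
static T Retry<T>(Func<T> action, int maxRetries, TimeSpan delay, Func<Exception, bool> isTransient)
{
    for (int attempt = 0; ; attempt++)
    {
        try
        {
            return action();
        }
        // Retry only transient failures, and only while retries remain; otherwise the exception propagates.
        catch (Exception ex) when (attempt < maxRetries && isTransient(ex))
        {
            Console.WriteLine($"Attempt {attempt + 1} failed ({ex.Message}); retrying...");
            Thread.Sleep(delay);
        }
    }
}

// Usage: a hypothetical operation that fails twice with a transient error, then succeeds.
int calls = 0;
string result = Retry(
    () =>
    {
        calls++;
        if (calls < 3) throw new TimeoutException("simulated transient failure");
        return "ok";
    },
    maxRetries: 5,
    delay: TimeSpan.FromMilliseconds(10),
    isTransient: ex => ex is TimeoutException);

Console.WriteLine($"{result} after {calls} calls");
```

Non-transient exceptions escape on the first attempt. Transient ones escape only after the original call and all `maxRetries` retries have failed.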

In Polly V8, a resilience pattern is called a “strategy”, and you use a strategy by making it part of a resilience “pipeline”. A pipeline can contain one or more resilience strategies. In other words, a resilience pipeline is the mechanism for combining one or more resilience patterns.

In this post, I will show how to create the different kinds of retry patterns described in the literature and available in Polly.

Retry Patterns

Retry patterns can be implemented in the following ways:

1. Immediate retry pattern: The immediate retry pattern calls the failed service or dependency again immediately, with no delay between the original call and the subsequent retry calls. It is generally applied where the dependency seldom fails and response time is crucial, either to maintain a good user experience or to evaluate the availability of the service or dependency. Examples include a heartbeat service or a disk-write service in an operating system.

2. Constant wait and retry pattern: The constant wait and retry pattern introduces a constant delay between the original call and the subsequent retry calls to the service or dependency of interest. It is appropriate where congestion at a bottleneck resource, such as a database, may have caused the original call to fail. Such issues usually resolve themselves some time after the original call.

3. Linear wait and retry pattern: The linear wait and retry pattern introduces a delay that grows linearly. The gap between successive calls therefore keeps increasing and, if plotted against the attempt number, forms a straight line. This pattern suits public-facing, high-traffic services that can scale horizontally, because the linearly increasing delay gives new instances room to spin up and come online. Examples include containerised services and serverless functions.

4. Exponential wait and retry pattern: The exponential wait and retry pattern introduces a delay between the original call and subsequent retry calls that grows exponentially, for example doubling after every attempt, so a graph of the delays forms an exponential curve. This pattern also suits high-traffic services that can scale horizontally. The exponential delay gives them time to come online while quickly reducing the retry load on a struggling dependency.
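The four patterns differ only in how the wait before each retry is computed. The plain C# sketch below uses a hypothetical `Schedule` helper and an assumed 1-second base delay to list the delay before each of the first five retries for each pattern. Real libraries, Polly included, may differ in details such as defaults and jitter.

```csharp
using System;
using System.Linq;

// Delay before each retry for the four patterns described above.
// "attempt" is the zero-based retry index; baseDelay is an illustrative value.
static TimeSpan[] Schedule(string pattern, TimeSpan baseDelay, int retries) =>
    Enumerable.Range(0, retries)
        .Select(attempt => pattern switch
        {
            "immediate" => TimeSpan.Zero,                       // no wait at all
            "constant" => baseDelay,                            // same wait every time
            "linear" => baseDelay * (attempt + 1),              // grows by baseDelay each time
            "exponential" => baseDelay * Math.Pow(2, attempt),  // doubles each time
            _ => throw new ArgumentException($"Unknown pattern: {pattern}")
        })
        .ToArray();

foreach (string pattern in new[] { "immediate", "constant", "linear", "exponential" })
{
    TimeSpan[] delays = Schedule(pattern, TimeSpan.FromSeconds(1), retries: 5);
    Console.WriteLine($"{pattern,-12} {string.Join(", ", delays)}");
}
```

With a 1-second base delay, the linear schedule is 1, 2, 3, 4, 5 seconds and the exponential schedule is 1, 2, 4, 8, 16 seconds. This shows how quickly exponential backoff stretches the total wait.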

General outline to implement a resilience pattern

Polly supports all of the patterns above. The general outline for using a pattern in Polly V8 is as follows:

1. Create strategy options (for example, `RetryStrategyOptions`) whose properties configure the desired resilience pattern.

2. Create a pipeline using the options defined in step 1. Optionally, several strategies or pipelines can be combined at this stage.

3. (Optional) Create a pipeline registry, which caches the pipelines it builds and lets you retrieve a pipeline by the name it was registered under.

Example

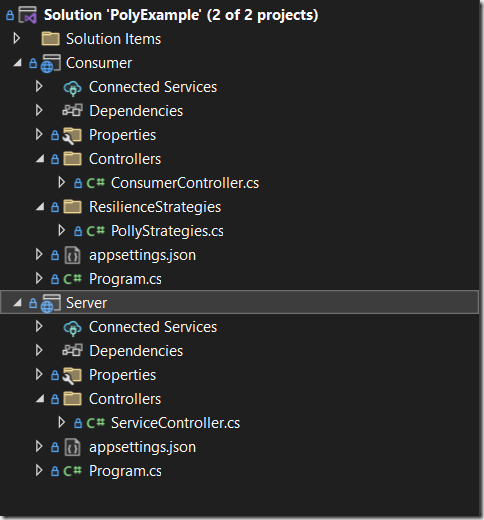

In the example, I have created two ASP.NET Web API projects. One acts as a server and returns a string result. It randomly succeeds or fails each incoming request, based on a random number generated for that request.

The second API project acts as a consumer, and this is where we implement the resilience strategies. The consumer sends a request to the server project, simulating machine-to-machine communication. If the request fails, a resilience strategy comes into play and executes one of the configured retry patterns.

Server Project: First, add a controller to the server project with the code shown below. It returns either a success string or one of several error status codes, depending on a randomly generated number.

```csharp
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;

namespace Server.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class ServiceController : Controller
    {
        [HttpGet]
        [Route("")]
        public IActionResult ServiceEndpoint()
        {
            Random random = new Random();

            // Next(1, 100) returns 1..99 (the upper bound is exclusive).
            int dice = random.Next(1, 100);

            if (dice < 30)
            {
                // 1..29: success
                return Ok("Call succeeded. Dice rolled in your favour.");
            }
            else if (dice < 50)
            {
                // 30..49: 502
                return StatusCode(StatusCodes.Status502BadGateway, "Bad gateway.");
            }
            else if (dice < 70)
            {
                // 50..69: 500
                return StatusCode(StatusCodes.Status500InternalServerError, "Internal server error.");
            }
            else
            {
                // 70..99: 408
                return StatusCode(StatusCodes.Status408RequestTimeout, "Request Timeout.");
            }
        }
    }
}
```
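Each request rolls the dice independently, so retries change the odds considerably. The short calculation below assumes that `Random.Next(1, 100)` is uniform over 1 to 99 and that only rolls below 30 succeed, as in the server controller. The constant names are illustrative.

```csharp
using System;

// Assumptions: dice is uniform over 1..99 (Random.Next's upper bound is exclusive),
// a call succeeds only when dice < 30, and every attempt rolls independently.
const int possibleRolls = 99;
const int successfulRolls = 29; // rolls 1..29
double failureProbability = (possibleRolls - successfulRolls) / (double)possibleRolls;

foreach (int retries in new[] { 0, 1, 3, 10 })
{
    // The consumer only sees an error if the original call and every retry fail.
    double allAttemptsFail = Math.Pow(failureProbability, retries + 1);
    Console.WriteLine($"{retries,2} retries -> success probability {1 - allAttemptsFail:P1}");
}
```

Under these assumptions, a single call succeeds about 29% of the time. With the ten retries configured later in this post, the chance that every attempt fails drops to roughly 2%.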

Consumer Project: Create the Consumer project structure shown in the image above. In the “ResilienceStrategies” folder, create a class named “PollyStrategies” (in PollyStrategies.cs). Register it as a singleton in the services section of Program.cs, for example with `builder.Services.AddSingleton<PollyStrategies>()`. The controller also depends on `IHttpClientFactory`, so register that too with `builder.Services.AddHttpClient()`. Because the class is a singleton, only one instance of each pipeline (or of the registry) exists throughout the application, whether we expose individual resilience pipelines or a pipeline registry.

Now we program the strategies, restricting ourselves to the different flavours of the retry pattern in this post. The resilience strategies must meet the following requirements:

1. The strategy should retry when the server's response indicates that something went wrong on the server, that is, when the HTTP status code returned by the server is in the 500 series.

2. The strategy should handle timeouts and request exceptions (`HttpRequestException`).

3. Various flavours of the retry pattern should be available: immediate retry, constant wait and retry, linear wait and retry, and exponential wait and retry.

To fulfil the requirements, we declare instances of resilience pipelines as properties and mark their setters as private. This way, they cannot be modified outside the class in which they are declared.

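Next, we declare the resilience strategy options that satisfy these requirements. They do not need to be accessed from outside the class, so we declare them as private fields. The PollyStrategies code relies on the Polly, Polly.Registry, Polly.Retry and Polly.Timeout namespaces, plus System.Net for `HttpStatusCode`.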

```csharp
// Pipelines and the registry are publicly readable but can only be set inside PollyStrategies.
public ResiliencePipelineRegistry<string> StrategyPipelineRegistry { get; private set; }

public ResiliencePipeline<HttpResponseMessage>? ImmediateRetryStrategy { get; private set; }
private RetryStrategyOptions<HttpResponseMessage>? immediateRetryStrategyOptions;

public ResiliencePipeline<HttpResponseMessage>? ConstantDelayRetryStrategy { get; private set; }
private RetryStrategyOptions<HttpResponseMessage>? constantDelayRetryStrategyOptions;

public ResiliencePipeline<HttpResponseMessage>? LinearWaitAndRetryStrategy { get; private set; }
private RetryStrategyOptions<HttpResponseMessage>? linearWaitAndRetryStrategyOptions;

public ResiliencePipeline<HttpResponseMessage>? ExponentialWaitRetryStrategy { get; private set; }
private RetryStrategyOptions<HttpResponseMessage>? exponentialWaitRetryStrategyOptions;
```

Next, we declare an array of HTTP status codes worth retrying: 408 Request Timeout plus the 5xx codes that typically indicate transient server-side problems. If the server responds with one of these codes, the consumer API triggers a retry.

```csharp
private static readonly HttpStatusCode[] httpStatusCodesWorthRetrying = new HttpStatusCode[]
{
    HttpStatusCode.RequestTimeout,      // 408
    HttpStatusCode.InternalServerError, // 500
    HttpStatusCode.BadGateway,          // 502
    HttpStatusCode.ServiceUnavailable,  // 503
    HttpStatusCode.GatewayTimeout       // 504
};
```
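Requirement 1 talks about the whole 500 series, while the array lists specific codes. The plain C# sketch below compares the array with a broader range check, `IsTransientStatus`, which is hypothetical and treats any 5xx code plus 408 as retryable. The comparison shows a trade-off. The range check also retries codes such as 501 Not Implemented, which usually indicate a permanent problem, so an explicit list is the more conservative choice.

```csharp
using System;
using System.Linq;
using System.Net;

// The explicit list used by the consumer project.
HttpStatusCode[] httpStatusCodesWorthRetrying =
{
    HttpStatusCode.RequestTimeout,      // 408
    HttpStatusCode.InternalServerError, // 500
    HttpStatusCode.BadGateway,          // 502
    HttpStatusCode.ServiceUnavailable,  // 503
    HttpStatusCode.GatewayTimeout       // 504
};

// Hypothetical alternative: treat every 5xx code, plus 408, as retryable.
static bool IsTransientStatus(HttpStatusCode code) =>
    (int)code >= 500 || code == HttpStatusCode.RequestTimeout;

HttpStatusCode[] samples =
{
    HttpStatusCode.OK, HttpStatusCode.NotFound, HttpStatusCode.RequestTimeout,
    HttpStatusCode.NotImplemented, HttpStatusCode.BadGateway
};

foreach (HttpStatusCode code in samples)
{
    Console.WriteLine($"{(int)code} {code}: in list = {httpStatusCodesWorthRetrying.Contains(code)}, " +
                      $"range check = {IsTransientStatus(code)}");
}
```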

To define the options for the required patterns, we write a method called “InitializeOptions”. The option properties it uses are:

a) MaxRetryAttempts: The maximum number of retries, in addition to the original call, before the strategy gives up.

b) BackoffType: How the delay between retries grows: constant, linear or exponential.

c) Delay: The base delay between retry calls; BackoffType determines how it grows from one retry to the next. Setting it to zero with a Constant BackoffType turns the strategy into an immediate retry strategy. Delay is not set in the other strategy options, so Polly's default of 2 seconds is used.

d) ShouldHandle: The most important option. It decides which outcomes, whether results or exceptions, count as failures worth retrying. A structured way to build it is with a PredicateBuilder object. Its fluent methods, such as `HandleResult(...)` and `Handle<TException>()`, let us handle anything from particular exceptions to particular results. Note that `TimeoutRejectedException` is thrown by Polly's own timeout strategy, so that handler only takes effect if a timeout strategy is part of the pipeline. HttpClient's built-in timeout surfaces as a `TaskCanceledException` instead.

e) OnRetry: A callback that runs before each retry attempt. It does not run for the original call, only for the retries.

```csharp
private void InitializeOptions()
{
    // Immediate retry: constant backoff with a zero delay.
    immediateRetryStrategyOptions = new RetryStrategyOptions<HttpResponseMessage>()
    {
        MaxRetryAttempts = 10,
        BackoffType = DelayBackoffType.Constant,
        Delay = TimeSpan.Zero,
        ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
            .HandleResult(response => httpStatusCodesWorthRetrying.Contains(response.StatusCode))
            .Handle<HttpRequestException>()
            .Handle<TimeoutRejectedException>(),
        OnRetry = async args => { await Console.Out.WriteLineAsync("ImmediateRetry - Retrying call..."); }
    };

    // Constant wait and retry: Delay is not set, so Polly's default delay applies.
    constantDelayRetryStrategyOptions = new RetryStrategyOptions<HttpResponseMessage>()
    {
        MaxRetryAttempts = 10,
        BackoffType = DelayBackoffType.Constant,
        ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
            .HandleResult(response => httpStatusCodesWorthRetrying.Contains(response.StatusCode))
            .Handle<HttpRequestException>()
            .Handle<TimeoutRejectedException>(),
        OnRetry = async args => { await Console.Out.WriteLineAsync("ConstantRetry - Retrying call..."); }
    };

    // Linear wait and retry: the delay grows linearly with each attempt.
    linearWaitAndRetryStrategyOptions = new RetryStrategyOptions<HttpResponseMessage>()
    {
        MaxRetryAttempts = 10,
        BackoffType = DelayBackoffType.Linear,
        ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
            .HandleResult(response => httpStatusCodesWorthRetrying.Contains(response.StatusCode))
            .Handle<HttpRequestException>()
            .Handle<TimeoutRejectedException>(),
        OnRetry = async args => { await Console.Out.WriteLineAsync("WaitAndRetry - Retrying call..."); }
    };

    // Exponential wait and retry: the delay doubles with each attempt.
    exponentialWaitRetryStrategyOptions = new RetryStrategyOptions<HttpResponseMessage>()
    {
        MaxRetryAttempts = 10,
        BackoffType = DelayBackoffType.Exponential,
        ShouldHandle = new PredicateBuilder<HttpResponseMessage>()
            .HandleResult(response => httpStatusCodesWorthRetrying.Contains(response.StatusCode))
            .Handle<HttpRequestException>()
            .Handle<TimeoutRejectedException>(),
        OnRetry = async args => { await Console.Out.WriteLineAsync("ExponentialRetry - Retrying call..."); }
    };
}
```

Once the options are defined, we build the pipelines. Each pipeline contains a retry strategy configured with the corresponding options. This is done in the “InitializePipelines” method.

```csharp
private void InitializePipelines()
{
    // Each pipeline contains a single retry strategy configured by the matching options field.
    ImmediateRetryStrategy = new ResiliencePipelineBuilder<HttpResponseMessage>()
        .AddRetry<HttpResponseMessage>(immediateRetryStrategyOptions!).Build();

    ConstantDelayRetryStrategy = new ResiliencePipelineBuilder<HttpResponseMessage>()
        .AddRetry<HttpResponseMessage>(constantDelayRetryStrategyOptions!).Build();

    LinearWaitAndRetryStrategy = new ResiliencePipelineBuilder<HttpResponseMessage>()
        .AddRetry<HttpResponseMessage>(linearWaitAndRetryStrategyOptions!).Build();

    ExponentialWaitRetryStrategy = new ResiliencePipelineBuilder<HttpResponseMessage>()
        .AddRetry<HttpResponseMessage>(exponentialWaitRetryStrategyOptions!).Build();
}
```

We now have two ways to use the resilience pipelines. We can either use the pipelines exposed as public properties of the “PollyStrategies” instance, or use a resilience pipeline registry property to access all of them by name. The registry offers some benefits, such as automatic caching of the pipelines it builds and automatic management of the resources associated with them.

In the example code, I have created a resilience pipeline registry, but it is optional. You can ignore it and work with the individual resilience pipeline instances exposed as properties.

```csharp
private void RegisterPipelines()
{
    StrategyPipelineRegistry = new ResiliencePipelineRegistry<string>();

    // Register each pipeline under a key so it can later be retrieved with GetPipeline.
    StrategyPipelineRegistry.TryAddBuilder<HttpResponseMessage>("ImmediateRetry", (builder, context) =>
    {
        builder.AddPipeline(ImmediateRetryStrategy);
    });

    StrategyPipelineRegistry.TryAddBuilder<HttpResponseMessage>("ConstantRetry", (builder, context) =>
    {
        builder.AddPipeline(ConstantDelayRetryStrategy);
    });

    StrategyPipelineRegistry.TryAddBuilder<HttpResponseMessage>("WaitAndRetry", (builder, context) =>
    {
        builder.AddPipeline(LinearWaitAndRetryStrategy);
    });

    StrategyPipelineRegistry.TryAddBuilder<HttpResponseMessage>("ExponentialRetry", (builder, context) =>
    {
        builder.AddPipeline(ExponentialWaitRetryStrategy);
    });
}
```

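Later in the code, you will see both use cases: accessing the pipelines via properties and via the pipeline registry. For all of this to work, the PollyStrategies constructor must call InitializeOptions(), InitializePipelines() and RegisterPipelines() in that order, because each step uses what the previous one created.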

Now we code the controller that uses the resilience pipelines.

```csharp
using Consumer.ResilienceStrategies;
using Microsoft.AspNetCore.Mvc;
using System.Net;

namespace Consumer.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class ConsumerController : Controller
    {
        private readonly IHttpClientFactory httpClientFactory;
        private readonly PollyStrategies pollyStrategies;

        public ConsumerController(IHttpClientFactory _httpClientFactory, PollyStrategies _pollyStrategies)
        {
            httpClientFactory = _httpClientFactory;
            pollyStrategies = _pollyStrategies;
        }

        [HttpGet]
        public async Task<IActionResult> ConsumerEndPoint(CancellationToken cancellationToken)
        {
            string url = "http://localhost:5106/api/service";
            HttpClient client = httpClientFactory.CreateClient();

            // The call is awaited inside the pipeline (instead of blocking with .Result) so that
            // exceptions such as HttpRequestException reach ShouldHandle unwrapped.

            //HttpResponseMessage response = await pollyStrategies
            //    .StrategyPipelineRegistry.GetPipeline<HttpResponseMessage>("ImmediateRetry")
            //    .ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);

            HttpResponseMessage response = await pollyStrategies
                .StrategyPipelineRegistry.GetPipeline<HttpResponseMessage>("ConstantRetry")
                .ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);

            //HttpResponseMessage response = await pollyStrategies
            //    .StrategyPipelineRegistry.GetPipeline<HttpResponseMessage>("WaitAndRetry")
            //    .ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);

            //HttpResponseMessage response = await pollyStrategies
            //    .StrategyPipelineRegistry.GetPipeline<HttpResponseMessage>("ExponentialRetry")
            //    .ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);

            // Pipelines exposed as properties:
            //HttpResponseMessage response = await pollyStrategies.ImmediateRetryStrategy!.ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);
            //HttpResponseMessage response = await pollyStrategies.ConstantDelayRetryStrategy!.ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);
            //HttpResponseMessage response = await pollyStrategies.LinearWaitAndRetryStrategy!.ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);
            //HttpResponseMessage response = await pollyStrategies.ExponentialWaitRetryStrategy!.ExecuteAsync(async token => await client.GetAsync(url, token), cancellationToken);

            // No resilience at all, for comparison:
            //HttpResponseMessage response = await client.GetAsync(url, cancellationToken);

            if (response.StatusCode == HttpStatusCode.OK)
            {
                return Ok("Server responded");
            }
            else
            {
                return StatusCode((int)response.StatusCode, "Problem happened with the request");
            }
        }
    }
}
```

Following best practice, we receive an `IHttpClientFactory` and the PollyStrategies instance via constructor injection, and we create the HttpClient from the factory. Note that the HttpClient is a plain client. No Polly policy (as strategies were called in V7) is attached to it directly.

The code in the action method is self-explanatory. To try the different resilience strategies, uncomment the corresponding lines, keeping only one `response` declaration active, and observe how each one behaves. You can call the ConsumerEndPoint action with Fiddler or Postman. The retries performed by the active strategy appear in the console window of the consumer API project.
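Whether the HTTP call is awaited or blocked on with `.Result` matters to the retry predicate. When a task faults, `.Result` throws an `AggregateException` that wraps the original error, whereas `await` rethrows the original exception. A predicate such as `.Handle<HttpRequestException>()` therefore only matches in the awaited case. The plain C# sketch below demonstrates this, with a hypothetical `FailingCallAsync` standing in for `client.GetAsync(url)`.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical stand-in for client.GetAsync(url) that fails with a transport error.
static Task<string> FailingCallAsync() =>
    Task.FromException<string>(new HttpRequestException("simulated network failure"));

// Blocking with .Result: the HttpRequestException arrives wrapped in an AggregateException.
Exception? blockingError = null;
try { _ = FailingCallAsync().Result; }
catch (Exception ex) { blockingError = ex; }

// Awaiting: the original HttpRequestException is rethrown unwrapped.
Exception? awaitedError = null;
try { _ = await FailingCallAsync(); }
catch (Exception ex) { awaitedError = ex; }

Console.WriteLine(blockingError?.GetType().Name);                 // AggregateException
Console.WriteLine(blockingError?.InnerException?.GetType().Name); // HttpRequestException
Console.WriteLine(awaitedError?.GetType().Name);                  // HttpRequestException
```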

Immediate Retry Pattern: Immediately sends a retry request to the server API project, because BackoffType is Constant and Delay is TimeSpan.Zero.

Constant Retry Pattern: Sends retry requests spaced apart by a constant delay, which defaults to 2 seconds. More information can be found in the Polly retry documentation listed in the references.

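Linear Retry Pattern: Sends retry requests spaced apart by a linearly increasing gap. The gap grows by Delay each time, so with the default 2-second Delay the gaps are 2, 4, 6 seconds, and so on. More information can be found in the Polly retry documentation listed in the references.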

Exponential Retry Pattern: Sends retry requests spaced apart by an exponentially increasing gap. Each gap is Delay multiplied by 2^n, where n is the zero-based retry attempt, so with the default 2-second Delay the gaps are 2, 4, 8, 16 seconds, and so on. More information can be found in the Polly retry documentation listed in the references.
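The sketch below adds up the waits to show what these defaults mean for the `MaxRetryAttempts = 10` used in this post. It assumes Polly V8's default 2-second Delay, no jitter and no maximum delay. The formulas reflect my understanding of Polly's documented backoff behaviour (constant: Delay; linear: Delay * (n + 1); exponential: Delay * 2^n, with n the zero-based retry index), so check the Polly retry documentation for your version. `DelaySeconds` is a hypothetical helper, not a Polly API.

```csharp
using System;
using System.Linq;

// Assumptions (not taken from the original project): Delay = 2 s (Polly V8's default),
// no jitter, no MaxDelay, and n is the zero-based index of the retry.
// DelaySeconds is a hypothetical helper, not a Polly API.
static double DelaySeconds(string backoffType, double delaySeconds, int n) => backoffType switch
{
    "Constant" => delaySeconds,
    "Linear" => delaySeconds * (n + 1),
    "Exponential" => delaySeconds * Math.Pow(2, n),
    _ => throw new ArgumentException($"Unknown backoff type: {backoffType}")
};

const double defaultDelaySeconds = 2;
const int maxRetryAttempts = 10; // as configured in InitializeOptions

foreach (string backoffType in new[] { "Constant", "Linear", "Exponential" })
{
    double[] delays = Enumerable.Range(0, maxRetryAttempts)
        .Select(n => DelaySeconds(backoffType, defaultDelaySeconds, n))
        .ToArray();
    Console.WriteLine($"{backoffType,-11} last gap: {delays[^1]} s, total wait: {delays.Sum()} s");
}
```

Under these assumptions, ten exponential retries end with a 1,024-second gap and add up to 2,046 seconds (about 34 minutes) of waiting. Linear backoff adds up to 110 seconds and constant backoff to 20 seconds. For an HTTP endpoint that a client is waiting on, this argues for fewer exponential retries or a cap on the delay (recent Polly V8 versions offer a MaxDelay option for this).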

You can also combine several resilience strategies, for example a timeout and a retry, into a single pipeline. I hope this simple introduction to the retry resilience pattern was helpful.

References:

1. Polly documentation: https://www.pollydocs.org/strategies/retry.html

2. Azure Architecture: https://learn.microsoft.com/en-us/azure/architecture/patterns/retry

3. ThinkMicroServices: http://thinkmicroservices.com/blog/2019/retry-pattern.html