Key takeaway:

Frequently there are scenarios here you might be required to key in multiple rows into a SQL Server database table. In order to do that with minimum number of calls and a low memory footprint, there are several approaches available. I know of three, of which I am presenting the comma separated values (CSV) list approach.

Read on:

A few days ago, I ran into a requirement that required me to insert multiple rows of data into a table in a SQL Server database. There are a couple of approaches to do this and I am planning to do a series on this. Some of the approaches to do this are the following:

1. Passing a comma separated value (CSV) list to the database and parsing it at the database level with the help of a user defined function to split the list into rows and inserting those rows.

2. Passing the data as XML hierarchy,

3. Using Table Valued Functions, a feature that SQL Server supports version 2008 onwards.

All the three approaches are valid approaches and usage depends upon the kind of constraints that you are operating with. I was dealing with a legacy codebase, that did not afforded me the luxury of table valued parameters, which is the most straight forward and efficient way of accomplishing this, and had to settle for the CSV list and eventually the XML way.

In this post I will be covering the CSV list method. The comma separated values list method requires the following main ingredient: a user defined function that can be used to split text on the defined delimiter. Since this post is all about demonstrating the comma separated values list approach, I will not delve into the internal mechanics of such function. I just grabbed the first function that I could get off of the internet, and made it work.

I got the split function from the following url: SQL Server Forums - Best split function courtesy of the member Corey alias Seventhnight.

The technique works on the following strategy:

1. Parse the information that needs to be stored in the database in the form of a CSV list.

2. Pass it to the database packed in an appropriate type of parameter.

3. De-serialize the information into a table variable with the help of the aforementioned splitter function.

4. Depending upon the total number of columns in the target table, run a combination of insert and update commands, fetching the information from the table variable. A combination is made this way in order to minimize the disk activity, and complete the operation in as short duration as possible.

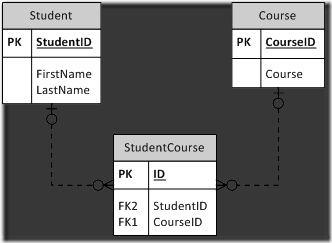

To demonstrate I will be using a very simple data model consisting of three tables: Student, Course and an association table supporting many-to-many relationship between Student and Course, StudentCourse. The Entity-Relationship diagram will clarify the relationship between the tables:

According the ER diagram, a student can take many courses and a course can be taken by many students. The insertion of courses that a student is interested in the association table is an ideal application of this technique.

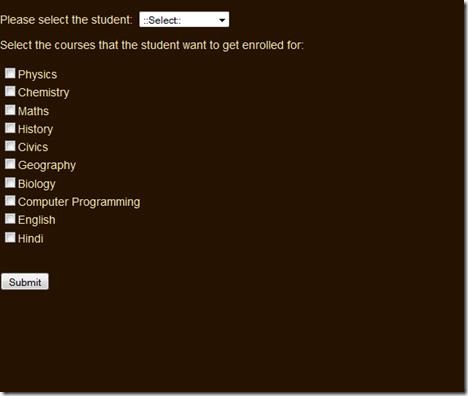

The following ASP.NET webform that is the web front end that we will use to form a complete example to demonstrate this approach:

The coding for the webpage is very simple. The pseudo-code is as follows:

1. Select a student from the drop down.

2. Select from the available courses that the student needs enrollment for.

3. Click on the submit button.

I am going to leave it up to the reader to understand the programming in the web application. It is straight forward and the domain model powering the application is a reflection of the data model depicted above.

All the magic happens in the stored procedure powering the enrollment of a student in one of the listed courses. The stored procedure is as follows:

1: CREATE PROCEDURE [dbo].[EnrollStudentInCourses]

2:

3: @StudentID int,

4:

5: @Courses nvarchar(max)

6:

7: AS

8:

9: BEGIN

10:

11: Declare @TempStudentCourseTable Table

12:

13: (

14:

15: StudentID int null,

16:

17: CourseID int null

18:

19: );

20:

21: Insert into @TempStudentCourseTable (CourseID)

22:

23: Select CONVERT(int,Data) from dbo.Split(@Courses,',');

24:

25: Update @TempStudentCourseTable Set StudentID = @StudentID;

26:

27: Insert into dbo.StudentCourse (StudentID,CourseID)

28:

29: Select StudentID,CourseID from @TempStudentCourseTable;

30:

31: END

32:

33: GO

34:

The pseudo-code for the stored procedure is as follows:

1. There are two parameters in the stored procedure – The student ID of the student, who needs to be enrolled, and the courses passed as an nvarchar(max) parameter representing a CSV list of the courses in which the enrollment needs to be done.

2. The procedure uses a table variable that mimics the table structure of the StudentCourse table, sans the primary key.

3. Insert command is carried out on the table variable, with the CSV list comprised of the courses passed to the splitter function. Since out splitter function returns a table, with two columns – ID (int type identity column), and Data (nvarchar type), we can right away leverage the Data field, and insert it into the table variable by carrying out required conversion on the fly. Here I am converting the Data to integer, since CourseID is of type int.

4. Once the insertion has been done, I update the StudentID field in the table variable with the passed parameter value.

5. Once this is complete, I ran the Insert…Select… command to key in data into the final StudentCourse table. Depending upon the settings in the database, this will be a minimally logged operation, thereby giving you speed benefits.

Note that this is an overly simple example of the stored procedure, not employing transaction, error handling etc. and is meant to convey the concept of usage of comma separated values list in inserting multiple rows.

Emerging pattern:

If you look at this and carry it forward to more complicated use cases, then you’ll see a pattern emerging out. The pattern is:

1. Model the table variable after the final table that is supposed to receive the data.

2. Insert the most variable information in the table variable first.

3. Follow up the insertion with updation of table variable with information that is not changing much, like the StudentID in this example. It remains constant for all the courses inserted into the table variable.

4. If you need selective action within the table variable, make use of an integer type identity column. In this example, we did not require that.

5. The complexity of the procedure will depend upon the total columns in the final table. The more the columns, the more the number of update commands. There will also be a loop that you will have to manage in order to update the table variable with the right row form the table emitted by the splitter function.

In the next post I will cover how to insert multiple rows in SQL Server database using XML.

The sample code for this post consists of the web application and the powering database. Download it from: